Hand Gesture-Controlled Unitree Go2 Robot

Description

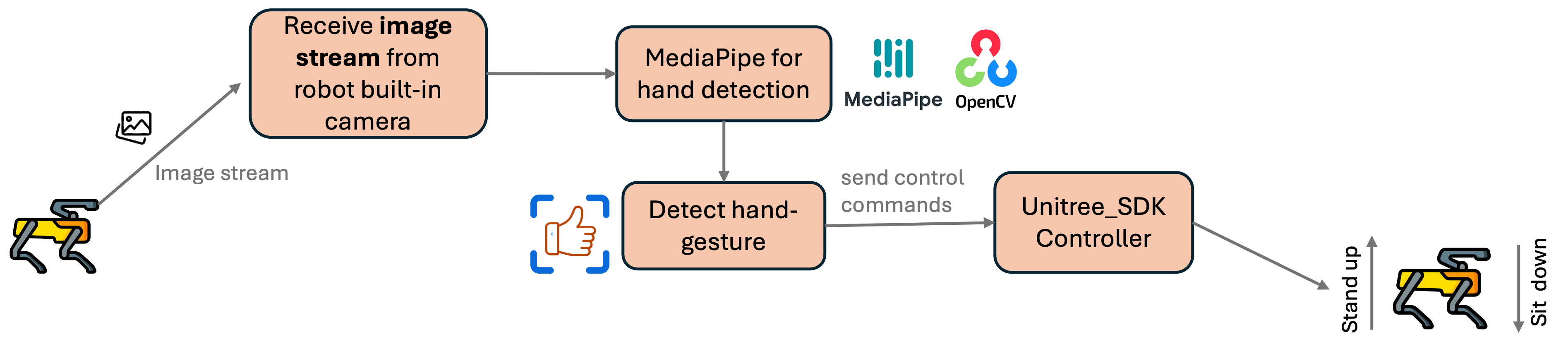

This project enables gesture-based control of the Unitree Go2 quadruped robot using a Python interface. The system leverages MediaPipe for real-time hand gesture recognition and OpenCV for image processing. The recognized gestures are used to command the robot to stand up or stand down, providing an intuitive and efficient way to interact with the robot.

Project Flowchart

Below is the flowchart illustrating the project’s workflow.

How It Works

The system follows these steps:

- The robot’s built-in camera streams video.

- The image stream is processed using OpenCV and MediaPipe.

- MediaPipe detects hand landmarks and classifies gestures.

- The detected gestures (thumbs up/down) are mapped to control commands.

- The commands are sent to the Unitree Go2 robot through its SDK.

- The robot responds by standing up or standing down.
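The six steps above can be sketched as a single loop. This is a minimal, hardware-free sketch, not the project's code. `run_pipeline` and its parameters are hypothetical names. Frames arrive as any iterable, and the OpenCV + MediaPipe stage is passed in as `detect_landmarks`. `commands` maps gesture labels to zero-argument callables. On the real robot, those callables would presumably be the `StandUp` and `StandDown` methods of an initialized `SportClient` from `unitree_sdk2py`; that wiring is an assumption.

```python
from typing import Callable, Dict, Iterable, List, Optional, Sequence, Tuple

# Normalized (x, y) hand landmarks, as produced by the detection stage.
Landmarks = Sequence[Tuple[float, float]]


def run_pipeline(
    frames: Iterable[object],
    detect_landmarks: Callable[[object], Optional[Landmarks]],
    classify: Callable[[Landmarks], Optional[str]],
    commands: Dict[str, Callable[[], None]],
) -> List[str]:
    """Run frames through detection, classification, and command dispatch.

    Returns the gesture labels whose commands were sent, in order.
    """
    sent: List[str] = []
    for frame in frames:                     # 1. frames from the camera stream
        landmarks = detect_landmarks(frame)  # 2-3. OpenCV + MediaPipe stage
        if landmarks is None:
            continue                         # no hand detected in this frame
        gesture = classify(landmarks)        # 4. e.g. "thumbs_up" / "thumbs_down"
        if gesture in commands:
            commands[gesture]()              # 5-6. SDK call; the robot moves
            sent.append(gesture)
    return sent
```

Injecting each stage as a callable keeps the loop testable without a robot or camera: fakes can stand in for the detector and the SDK.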

Hand Gesture Recognition

The system uses MediaPipe to detect and analyze hand gestures. Each frame from the robot’s front camera is processed in real time to identify hand landmarks and classify gestures.

The gesture recognition logic looks specifically at the position of the thumb to differentiate between two commands:

- Thumbs Up → The robot stands up.

- Thumbs Down → The robot stands down.
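MediaPipe Hands returns 21 landmarks per hand in normalized image coordinates, with y growing downward. Because of this, the thumb check can be written as a comparison of y values along the thumb chain: the MCP joint is index 2, the IP joint is index 3, and the tip is index 4. The function below is a minimal sketch under that assumption. The name `classify_thumb`, the `margin` threshold, and the "all three joints in order" rule are illustrative choices, not taken from the project. With MediaPipe's legacy `mp.solutions.hands` API, the input could be built as `[(lm.x, lm.y) for lm in hand.landmark]` for each `hand` in `results.multi_hand_landmarks`.

```python
from typing import Optional, Sequence, Tuple

# MediaPipe Hands landmark indices for the thumb (21 landmarks per hand).
THUMB_MCP = 2
THUMB_IP = 3
THUMB_TIP = 4


def classify_thumb(
    landmarks: Sequence[Tuple[float, float]], margin: float = 0.05
) -> Optional[str]:
    """Return "thumbs_up", "thumbs_down", or None from normalized (x, y) landmarks.

    Image y grows downward, so a smaller y means higher in the frame.
    `margin` (an assumed threshold) is the minimum vertical distance between
    the thumb tip and the thumb MCP joint, which rejects a sideways thumb.
    """
    if len(landmarks) < 21:
        raise ValueError("expected 21 hand landmarks")
    mcp_y = landmarks[THUMB_MCP][1]
    ip_y = landmarks[THUMB_IP][1]
    tip_y = landmarks[THUMB_TIP][1]

    # Thumb pointing up: MCP, IP, and tip climb higher in that order.
    if tip_y < ip_y < mcp_y and mcp_y - tip_y > margin:
        return "thumbs_up"
    # Thumb pointing down: MCP, IP, and tip descend lower in that order.
    if tip_y > ip_y > mcp_y and tip_y - mcp_y > margin:
        return "thumbs_down"
    return None
```

Requiring all three joints to line up, rather than comparing the tip alone, makes a bent or sideways thumb more likely to return `None` than a wrong command.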

Real-Time Testing

The following video demonstrates the system in action, where the robot responds to hand gestures in real time.

Code Overview

The core functionality is implemented in Python, utilizing the unitree_sdk2py library to send movement commands to the Unitree Go2 robot. Below is a high-level breakdown of the code:

- Video Stream Processing
  - Captures video frames from the robot’s built-in camera.
  - Converts frames into a format suitable for hand recognition.
  - Uses MediaPipe to detect hand landmarks.

- Gesture Recognition
  - Detects the thumb tip position.
  - Determines whether the thumb is up or down.

- Robot Control
  - Sends control commands via the Unitree SDK based on the detected gesture.
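A per-frame classifier will flicker when a hand moves into or out of view, so the robot-control step may benefit from a small debouncing layer. This is one possible design, sketched under assumptions; it is not taken from the project. `GestureDispatcher` and its `hold_frames` parameter are hypothetical. Under this design, an action fires only after the same gesture is seen on several consecutive frames. It also does not fire again until a different gesture has fired in between.

```python
from typing import Callable, Dict, Optional


class GestureDispatcher:
    """Turn per-frame gesture labels into robot commands (illustrative sketch).

    An action fires only after the same gesture has been seen on `hold_frames`
    consecutive frames, and it is not fired again until a different gesture
    has fired in between.
    """

    def __init__(
        self, actions: Dict[str, Callable[[], None]], hold_frames: int = 5
    ) -> None:
        if hold_frames < 1:
            raise ValueError("hold_frames must be at least 1")
        self.actions = actions
        self.hold_frames = hold_frames
        self._candidate: Optional[str] = None  # gesture in the current streak
        self._count = 0                         # length of the current streak
        self._last_fired: Optional[str] = None  # most recent gesture that fired

    def update(self, gesture: Optional[str]) -> Optional[str]:
        """Feed one frame's gesture (or None); return the gesture that fired, if any."""
        if gesture == self._candidate:
            self._count += 1
        else:
            # A different gesture (or no hand) breaks the streak.
            self._candidate = gesture
            self._count = 1
        if (
            gesture in self.actions
            and self._count >= self.hold_frames
            and gesture != self._last_fired
        ):
            self.actions[gesture]()
            self._last_fired = gesture
            return gesture
        return None
```

On the robot, `actions` might be `{"thumbs_up": sport_client.StandUp, "thumbs_down": sport_client.StandDown}`, where `sport_client` is an initialized `SportClient`. This wiring is assumed. Suppressing repeats avoids sending the same stand command to the robot on every frame while the gesture is held.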

For a detailed look at the implementation, visit the GitHub repository:

GitHub Repository

Future Improvements

Some potential enhancements for this project include:

- Adding more hand gestures for extended functionality.

- Integrating object recognition alongside gesture control.

- Improving robustness in various lighting conditions.

This project demonstrates an intuitive way to control a quadruped robot using natural hand movements, making human-robot interaction more seamless and accessible.

Acknowledgment

This project was inspired by the amazing work of Ava Zahedi, who developed a hand gesture-based control system for the Unitree Go1 robot. I am grateful to her for making the project publicly available, as it served as a valuable reference while building this system.

I highly encourage everyone to check out Ava’s project here:

Gesture-Based Quadruped Control by Ava Zahedi